Code

library(tidyverse)

library(bggjphd)

theme_set(theme_bggj())Using R and FTP to programmatically fetch a large amount of data for processing

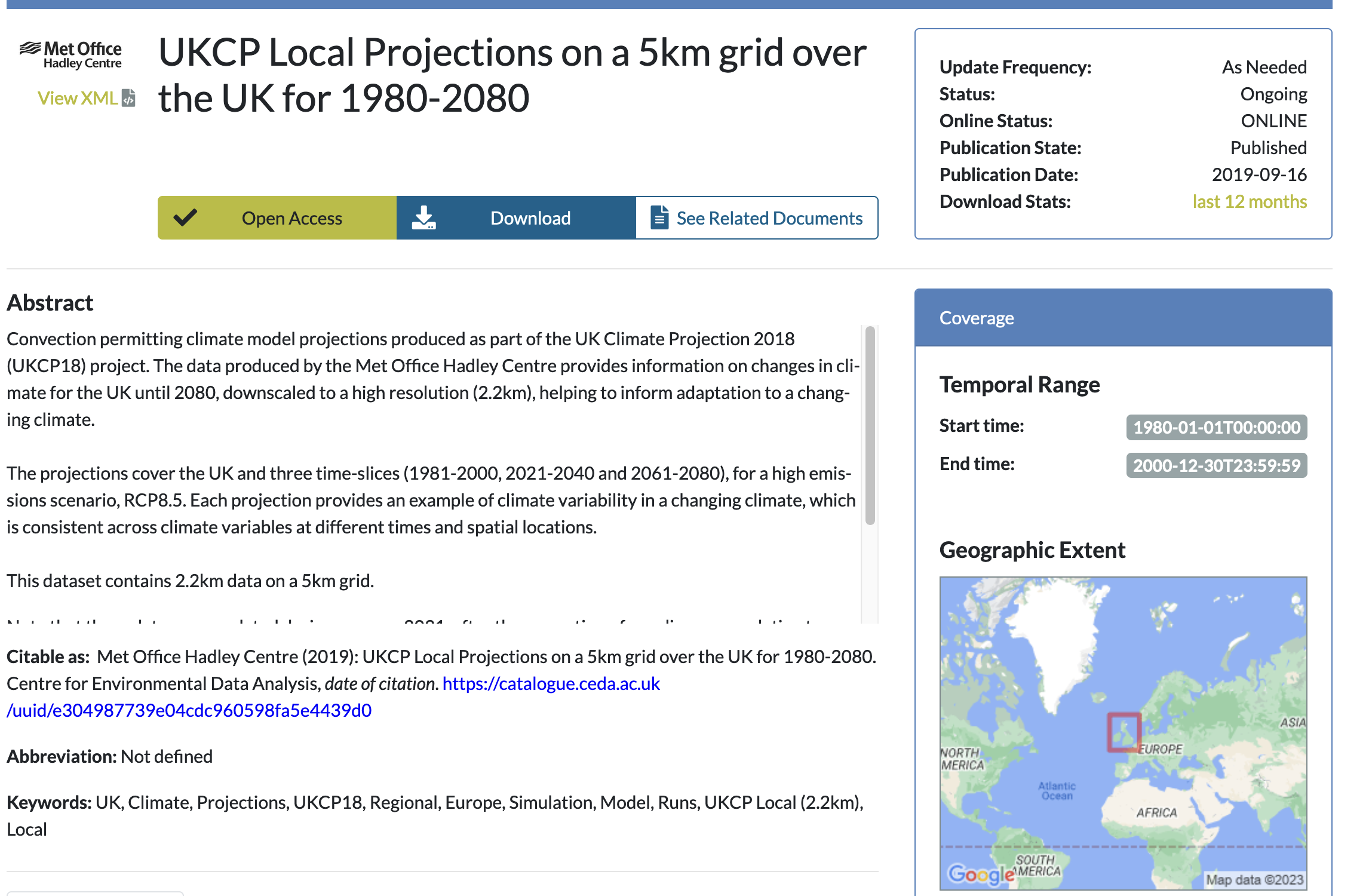

For my PhD modeling I needed to fetch a large amount of data from the CEDA Archive, specifically I use hourly precipitation projections from UKCP Local Projections on a 5km grid over the UK for 1980-2080. The hourly precipitation projections are stored in 720 files that are all approximately 120mb to 130mb. Here I write out my processing in case someone needs help with doing something similar.

June 28, 2022


I could go through the list of files and click on each one to download it, but being a lazy programmer, I wasn't satisfied with that, so I wrote a program that fetches the list of files, downloads them one at a time, and keeps only the data I need.

The hardest part was getting my FTP connection to work, so I decided to write out my process in the hope that some future googlers find this post.

The first things you’re going to need are the following:

In my case the 720 files are located at

We need to put our username and password into the URL to download the data. To keep my login details out of my code, I store them in my .Renviron file (easy to edit with usethis::edit_r_environ()) and write small functions that read them whenever a request needs them. I never assign my credentials to variables; I only ever access them through these functions.
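A minimal sketch of such helpers, assuming you add two lines like CEDA_USR=... and CEDA_PWD=... to your .Renviron file. The variable names and function names here are hypothetical; use whatever you chose. Base R's Sys.getenv() reads the values, and stopping with a clear message when a value is empty saves you from a confusing login error later.

```r
# Assumed .Renviron entries (hypothetical names):
#   CEDA_USR=your_username
#   CEDA_PWD=your_ftp_password
# After editing .Renviron, restart R or run readRenviron("~/.Renviron").

# Read an environment variable, stopping with a clear message if it is unset
get_env_or_stop <- function(name) {
  value <- Sys.getenv(name)
  if (!nzchar(value)) {
    stop("Environment variable ", name, " is not set", call. = FALSE)
  }
  value
}

# The credentials are only ever returned by these functions,
# never stored in global variables
ceda_usr <- function() get_env_or_stop("CEDA_USR")
ceda_pwd <- function() get_env_or_stop("CEDA_PWD")

# "username:password" form, as used by curl's userpwd option
ceda_userpwd <- function() paste0(ceda_usr(), ":", ceda_pwd())
```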

Now we can send a request to the FTP server to get a list of all the files we want to download.

The result comes back as one long string containing all the file names. It's easy to split it into separate strings and remove the trailing empty line.
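The request and the split could look roughly like this. It is a sketch under assumptions, not necessarily the code I ran: it uses the curl package, takes the directory URL and a "username:password" string as arguments (supply the latter through a function, not a variable), and the names list_ftp_files() and split_listing() are hypothetical. The dirlistonly option asks the FTP server for bare file names instead of a full listing with sizes and dates.

```r
# List the file names in an FTP directory.
# dir_url: directory URL ending in "/" (use your own path)
# userpwd: "username:password" string
list_ftp_files <- function(dir_url, userpwd) {
  handle <- curl::new_handle(dirlistonly = TRUE, userpwd = userpwd)
  response <- curl::curl_fetch_memory(dir_url, handle = handle)
  split_listing(rawToChar(response$content))
}

# The server returns one long string with one file name per line.
# Split on line breaks (with or without \r), drop empty entries
# and keep only the NetCDF files.
split_listing <- function(listing) {
  files <- strsplit(listing, "\r?\n")[[1]]
  files <- files[nzchar(files)]
  files[grepl("\\.nc$", files)]
}
```

Called as list_ftp_files(dir_url, userpwd), it returns a character vector of .nc file names.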

```
[1] "pr_rcp85_land-cpm_uk_5km_01_1hr_19801201-19801230.nc"
[2] "pr_rcp85_land-cpm_uk_5km_01_1hr_19810101-19810130.nc"
[3] "pr_rcp85_land-cpm_uk_5km_01_1hr_19810201-19810230.nc"
[4] "pr_rcp85_land-cpm_uk_5km_01_1hr_19810301-19810330.nc"
[5] "pr_rcp85_land-cpm_uk_5km_01_1hr_19810401-19810430.nc"
[6] "pr_rcp85_land-cpm_uk_5km_01_1hr_19810501-19810530.nc"
```

Now comes the tricky part. We are going to download 720 files (one for each month) of around 120 MB to 130 MB each. If we simply downloaded them and kept them on our hard drive, they would take up roughly 86 to 94 GB. Instead, the function process_data() below handles one file at a time: it downloads the file to a temporary location, computes the maximum hourly precipitation in each grid cell over the month, and returns only those maxima together with the coordinates and dates.

Before we can iterate we need one more helper function. Since we will now be using download.file() to download our data sets, we need to put our username and password into the URL. As before, to avoid revealing our credentials we use functions instead of creating global variables in the environment, so we won't accidentally leak them when, for example, taking screenshots.
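The original helper isn't shown here, so this is a hypothetical sketch of what make_download_path() could look like. It assumes the credentials live in environment variables named CEDA_USR and CEDA_PWD (placeholder names), and ftp.example.org/path/to/files is a placeholder, not the real location of the files. URLencode(reserved = TRUE) escapes characters such as @ or : that would otherwise break the user:password@host part of the URL.

```r
# Build "ftp://user:password@host/dir/filename" for download.file().
# CEDA_USR / CEDA_PWD are hypothetical environment variable names, and the
# default dir is a placeholder. Credentials are read inside the function,
# never stored globally.
make_download_path <- function(filename, dir = "ftp.example.org/path/to/files") {
  usr <- utils::URLencode(Sys.getenv("CEDA_USR"), reserved = TRUE)
  pwd <- utils::URLencode(Sys.getenv("CEDA_PWD"), reserved = TRUE)
  paste0("ftp://", usr, ":", pwd, "@", dir, "/", filename)
}
```

Its result can go straight into download.file(), as in process_data().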

The data files are stored in NetCDF (.nc) format. The ncdf4 package lets us open these files and pull in the variables we need.
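To see why process_data() can pair the flattened maxima with grid indices, here is a small base-R example with a made-up 3 x 2 grid and 4 "hours" instead of the real 180 x 244 grid and a month of hourly values. apply(MARGIN = c(1, 2), FUN = max) collapses the time dimension, and as.numeric() flattens the resulting matrix column by column, so x varies fastest. Base R's expand.grid() stands in here for tidyr::crossing() followed by arrange(proj_y, proj_x), which produces the same order.

```r
# Toy stand-in for the "pr" array: 3 x-cells, 2 y-cells, 4 hourly time steps
nx <- 3
ny <- 2
nt <- 4
set.seed(1)
pr <- array(runif(nx * ny * nt), dim = c(nx, ny, nt))

# Maximum over time for each (x, y) cell -> nx-by-ny matrix
max_pr <- apply(pr, MARGIN = c(1, 2), FUN = max)

# expand.grid() varies its first argument fastest, i.e. the same
# column-major order in which as.numeric() flattens a matrix
grid <- expand.grid(proj_x = seq_len(nx), proj_y = seq_len(ny))
grid$precip <- as.numeric(max_pr)

# Each row now holds the maximum for its own grid cell
print(grid)
```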

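```r
process_data <- function(filename) {
  # Short pause between requests so we don't hammer the FTP server
  Sys.sleep(0.1)

  # File names end in "_YYYYMMDD-YYYYMMDD.nc". UKCP uses a 360-day calendar
  # where every month has 30 days (e.g. "19810230"), so only the start date
  # is parsed and the end date is set to the last day of that calendar month
  from_to <- stringr::str_extract_all(filename, "_[0-9]{8}-[0-9]{8}")[[1]] |>
    stringr::str_replace("_", "") |>
    stringr::str_split_1("-")

  from <- as.Date(from_to[1], format = "%Y%m%d")
  to <- from + lubridate::period(1, units = "month") - lubridate::days(1)

  # Download into a temporary file that is deleted when the function exits,
  # so the ~120 MB files don't accumulate in tempdir() over 720 iterations
  tmp <- tempfile(fileext = ".nc")
  on.exit(unlink(tmp), add = TRUE)

  download.file(
    make_download_path(filename),
    tmp,
    mode = "wb",
    quiet = TRUE
  )

  # Open the NetCDF file; close the connection on exit, before the file is deleted
  temp_d <- ncdf4::nc_open(tmp)
  on.exit(ncdf4::nc_close(temp_d), add = TRUE, after = FALSE)

  # "pr" comes back with the two grid dimensions first and the hourly time
  # steps last; take the maximum over time in each cell (180 x 244 matrix)
  max_pr <- ncdf4::ncvar_get(temp_d, "pr") |>
    apply(MARGIN = c(1, 2), FUN = max)

  lat <- ncdf4::ncvar_get(temp_d, "latitude")
  long <- ncdf4::ncvar_get(temp_d, "longitude")

  # Arranging by proj_y, then proj_x, makes proj_x vary fastest, matching the
  # column-major order in which as.numeric() flattens the matrices
  tidyr::crossing(
    proj_x = 1:180,
    proj_y = 1:244,
    from_date = from,
    to_date = to
  ) |>
    dplyr::arrange(proj_y, proj_x) |>
    dplyr::mutate(
      precip = as.numeric(max_pr),
      longitude = as.numeric(long),
      latitude = as.numeric(lat),
      station = dplyr::row_number()
    )
}
```

Having defined our function, we pass it to purrr::map_dfr() for iteration (map_dfr() tells R that the output should be a data frame in which the results of each iteration are bound together row-wise) and say yes please to a progress bar. I could have used the furrr package to download several files in parallel and cut down the run time, but I was afraid of getting timed out by the CEDA FTP server, so I decided to just be patient.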
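For readers who would rather not depend on purrr, here is a base-R sketch of the same loop. It is not the code I ran: process_all() is a hypothetical helper that shows a text progress bar via utils::txtProgressBar(), catches errors so one failed download doesn't throw away hours of work, and returns the failed file names so they can be retried. It takes the processing function as an argument, so it works with process_data() or any function that returns a data frame.

```r
# Apply `process` to each file name with a progress bar.
# Failed files are recorded instead of stopping the whole run.
process_all <- function(files, process) {
  stopifnot(length(files) > 0)
  results <- vector("list", length(files))
  failed <- character(0)

  pb <- utils::txtProgressBar(min = 0, max = length(files), style = 3)
  on.exit(close(pb), add = TRUE)

  for (i in seq_along(files)) {
    res <- tryCatch(
      process(files[i]),
      error = function(e) {
        # Remember the file and carry on with the next one
        failed <<- c(failed, files[i])
        NULL
      }
    )
    # Leave the slot as NULL on failure (assigning NULL with [[<- would
    # delete the element instead)
    if (!is.null(res)) results[[i]] <- res
    utils::setTxtProgressBar(pb, i)
  }

  # Bind the successful results row-wise, as purrr::map_dfr() would
  list(
    data = do.call(rbind, Filter(Negate(is.null), results)),
    failed = failed
  )
}
```

Called as process_all(files, process_data), out$data plays the role of the map_dfr() result and out$failed lists anything worth retrying.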

Having created our dataset, we write it to disk using everyone's favorite new format, Parquet. This way we can use arrow::open_dataset() to query the data efficiently without reading it all into memory.
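A minimal sketch of the write and query steps with the arrow package, assuming the combined data frame is called d (a hypothetical name) and that dplyr is installed for the query verbs. open_dataset() does not read the data into memory; arrow evaluates the filter() itself, and collect() brings only the matching rows into R. The helper names are hypothetical.

```r
# Write the combined monthly maxima to a single Parquet file
save_maxima <- function(d, path) {
  arrow::write_parquet(d, path)
  invisible(path)
}

# Lazily open the file and pull only one station's rows into memory
read_station <- function(path, station_id) {
  arrow::open_dataset(path) |>
    dplyr::filter(station == !!station_id) |>
    dplyr::collect()
}
```

For example, save_maxima(d, "monthly_maxima.parquet") followed by read_station("monthly_maxima.parquet", 1) returns the monthly maxima for station 1 only.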

This whole process took 3 hours and 21 minutes on my computer. The largest bottleneck by far was downloading the data.

I mentioned above that I only needed the yearly data, but the dataset currently contains monthly maxima. Since I might need to do seasonal modeling later in my PhD, I decided it would be smart to keep the monthly data, but it's also very easy to further summarise it into yearly maxima.

Since the data for 1980 contain only one month, I decided not to include that year, as its maximum is not really a true yearly maximum.
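One way to do the yearly summary in base R, as a sketch: it assumes the monthly data have the station, from_date and precip columns created by process_data(), takes the maximum per station and calendar year, and keeps only years with all 12 months present. That rule is slightly more general than dropping 1980 by hand, so note that it would also drop any other incomplete year. The helper name yearly_maxima() is hypothetical.

```r
# Collapse monthly maxima to yearly maxima per station,
# keeping only years with all 12 months of data
yearly_maxima <- function(monthly) {
  monthly$year <- as.integer(format(monthly$from_date, "%Y"))

  # Maximum and number of months per station and calendar year
  yearly <- aggregate(precip ~ station + year, data = monthly, FUN = max)
  n_months <- aggregate(precip ~ station + year, data = monthly, FUN = length)
  names(n_months)[names(n_months) == "precip"] <- "n_months"
  yearly <- merge(yearly, n_months, by = c("station", "year"))

  # Drop incomplete years such as 1980, which only has December
  full_years <- yearly[yearly$n_months == 12, c("station", "year", "precip")]
  full_years[order(full_years$station, full_years$year), , drop = FALSE]
}
```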